HTTP/2 is a major revision of the HTTP protocol that improves the performance of the web. It is a binary protocol and supports multiplexing.

What are the primary goals of HTTP/2?

- Reduce latency.

- Minimise protocol overhead.

- Add support for request prioritisation and server push.

Let me give you a quick overview of how HTTP/1.1 works before we jump into how HTTP/2 works.

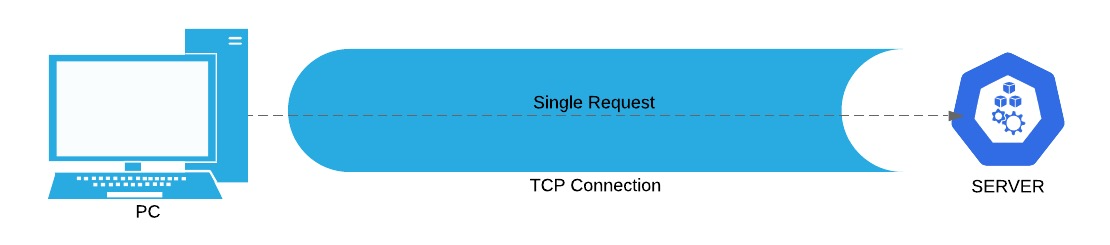

HTTP/1.1 is a simple request/response protocol. The client and server establish a TCP connection, which is stateful, and the client sends one request at a time over it. Once a request is made, that connection is busy until the response comes back, so no other request can be sent on it in the meantime. (HTTP/1.1 does define pipelining, but responses must still come back in order, so one slow response blocks everything behind it, and most browsers do not enable it.) As a result, the connection is badly under-utilised, and that makes HTTP/1.1 slow.

OK, HTTP/1.1 had performance limitations. What came next? SPDY! Let's see how SPDY came about.

Allowing only one request/response at a time on a TCP connection is very expensive. Each connection holds resources on the server, such as file descriptors and memory, so using it for only one request at a time wastes them. A single TCP connection can do much more than that. Google realised this and developed SPDY as an experimental protocol. It was announced in 2009, and its primary goal was to reduce latency and address the performance limitations of HTTP/1.1.

What do you think the IETF's HTTP Working Group (HTTP-WG) did next?

After observing SPDY's success, they studied it closely. Building on what they learned from SPDY, they designed an official standard: HTTP/2, published as RFC 7540 in 2015.

Interesting history, isn't it? Come on, let's move on to how HTTP/2 works.

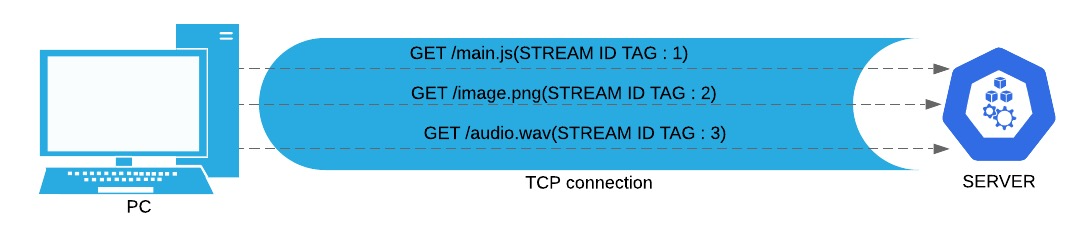

From the client's perspective, HTTP/2 works very similarly: we can still make GET requests, POST requests, etc., and we still use a single TCP connection. The difference is that this single connection is used much more efficiently. The client can shove as many requests as it wants into this pipe at the same time, without waiting for earlier responses to come back. This is called multiplexing.
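To make the difference concrete, here is a toy back-of-the-envelope model in Python. It assumes each request costs one network round trip plus some server processing time, and it ignores bandwidth, TCP slow start and extra parallel connections. The function names and numbers are made up for illustration.

```python
from typing import Sequence

# Toy model (assumption): each request costs one network round trip plus
# its server processing time; bandwidth, TCP slow start and extra
# connections are ignored.


def http11_total_ms(server_times_ms: Sequence[float], rtt_ms: float) -> float:
    """HTTP/1.1 on one connection: each request waits for the previous response."""
    return sum((rtt_ms + t for t in server_times_ms), 0.0)


def http2_total_ms(server_times_ms: Sequence[float], rtt_ms: float) -> float:
    """HTTP/2: all requests are multiplexed onto the connection at once, so we
    wait about one round trip plus the slowest server response."""
    if not server_times_ms:
        return 0.0
    return rtt_ms + max(server_times_ms)


if __name__ == "__main__":
    times = [20.0, 35.0, 10.0, 50.0]  # hypothetical server times per resource
    print("HTTP/1.1:", http11_total_ms(times, rtt_ms=100.0), "ms")
    print("HTTP/2:  ", http2_total_ms(times, rtt_ms=100.0), "ms")
```

With four resources, a 100 ms round trip and server times of 20, 35, 10 and 50 ms, the one-at-a-time model gives 515 ms while the multiplexed model gives 150 ms. Real numbers will differ, but the shape of the gap is the point.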

Alright! You may next ask: how does the server know which request is which? And when the client gets a response, how does it know which request that response belongs to?

Let me dive into how this confusion is resolved. This is where the stream ID comes in. It is internal to the protocol, so as clients of HTTP/2 we never see it. Every request made over HTTP/2 is sent on its own stream, tagged with a stream ID (streams opened by the client use odd numbers: 1, 3, 5 and so on). When the server sends the response back, it is tagged with the same stream ID. This is how the client knows which response belongs to which request, and it is what makes multiplexing work: pieces of different streams can be interleaved on the wire and put back together on the other side.
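The stream ID lives in the header of every HTTP/2 frame. Per the specification, each frame starts with a 9-byte header: a 24-bit payload length, an 8-bit type, 8 bits of flags, and a reserved bit followed by a 31-bit stream ID. The sketch below is a minimal, hypothetical encoder/decoder (not any real library's API) that builds DATA frames for two interleaved streams and regroups them by stream ID, which is essentially what the receiving side of a multiplexed connection does. It ignores HEADERS frames, flow control and error handling.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Frame type code and flag from the HTTP/2 specification.
DATA = 0x0
END_STREAM = 0x1  # flag on DATA frames: last frame of this stream
FRAME_HEADER_LEN = 9

Frame = Tuple[int, int, int, bytes]  # (type, flags, stream_id, payload)


def encode_frame(frame_type: int, flags: int, stream_id: int, payload: bytes) -> bytes:
    """Build one frame: a 9-byte header followed by the payload."""
    if len(payload) >= 1 << 24:
        raise ValueError("payload does not fit in a 24-bit length")
    if not 0 <= stream_id < 1 << 31:
        raise ValueError("stream ID must fit in 31 bits")
    header = (
        len(payload).to_bytes(3, "big")  # 24-bit length
        + bytes([frame_type, flags])     # 8-bit type, 8-bit flags
        + stream_id.to_bytes(4, "big")   # reserved bit (0) + 31-bit stream ID
    )
    return header + payload


def decode_frames(buf: bytes) -> List[Frame]:
    """Split a byte buffer into complete frames; a trailing partial frame is ignored."""
    frames: List[Frame] = []
    offset = 0
    while offset + FRAME_HEADER_LEN <= len(buf):
        length = int.from_bytes(buf[offset:offset + 3], "big")
        frame_type, flags = buf[offset + 3], buf[offset + 4]
        stream_id = int.from_bytes(buf[offset + 5:offset + 9], "big") & 0x7FFFFFFF
        start = offset + FRAME_HEADER_LEN
        if start + length > len(buf):
            break  # incomplete frame: wait for more bytes
        frames.append((frame_type, flags, stream_id, buf[start:start + length]))
        offset = start + length
    return frames


def demultiplex(frames: Iterable[Frame]) -> Dict[int, bytes]:
    """Concatenate DATA payloads per stream ID, in arrival order."""
    streams: Dict[int, bytes] = defaultdict(bytes)
    for frame_type, _flags, stream_id, payload in frames:
        if frame_type == DATA:
            streams[stream_id] += payload
    return dict(streams)


if __name__ == "__main__":
    # Two client-initiated streams (odd IDs 1 and 3) interleaved on one connection.
    wire = (
        encode_frame(DATA, 0, 1, b"<html>")
        + encode_frame(DATA, 0, 3, b"body {")
        + encode_frame(DATA, END_STREAM, 1, b"</html>")
        + encode_frame(DATA, END_STREAM, 3, b"}")
    )
    print(demultiplex(decode_frames(wire)))
```

Even though the frames for streams 1 and 3 arrive interleaved, grouping by stream ID gives back `b"<html></html>"` for stream 1 and `b"body {}"` for stream 3.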

Whoa! That clears up the confusion.

What else does HTTP/2 have to offer?

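HTTP/2 allows the server to push multiple responses for a single request. However, the client must support this and allow it; a client can turn push off entirely in its HTTP/2 settings. Whether this is an advantage or a disadvantage depends entirely on the use case. Note that several major browsers, including Chrome, have since dropped support for server push.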
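Concretely, a client opts out of push by sending a SETTINGS frame with SETTINGS_ENABLE_PUSH (identifier 0x2) set to 0, normally as part of its connection preface. The sketch below builds that frame by hand just to show its shape; the helper name `settings_frame` is hypothetical, and in real code an HTTP/2 library sends this for you.

```python
from typing import Dict

SETTINGS = 0x4              # SETTINGS frame type
SETTINGS_ENABLE_PUSH = 0x2  # setting identifier; value 0 disables server push


def settings_frame(settings: Dict[int, int]) -> bytes:
    """Build a SETTINGS frame: each setting is a 16-bit ID plus a 32-bit value,
    and SETTINGS frames always use stream ID 0 (the connection itself)."""
    payload = b"".join(
        ident.to_bytes(2, "big") + value.to_bytes(4, "big")
        for ident, value in settings.items()
    )
    header = (
        len(payload).to_bytes(3, "big")  # 24-bit payload length
        + bytes([SETTINGS, 0])           # frame type, flags (not an ACK)
        + (0).to_bytes(4, "big")         # stream ID 0
    )
    return header + payload


if __name__ == "__main__":
    print(settings_frame({SETTINGS_ENABLE_PUSH: 0}).hex())
```

The result is 15 bytes: the 9-byte frame header followed by one 6-byte setting.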

Pros of HTTP/2

If you have read this far, you already know the first one: multiplexing over a single TCP connection. You can send a large number of requests concurrently, whether they are GET, POST, PUT and so on.

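Next is header compression. HTTP/2 compresses headers using HPACK, which keeps a table of previously sent header fields (plus a predefined static table of common ones), so repeated headers such as cookies or the user agent can be sent as short index numbers instead of full text. This has nothing to do with stream IDs. Request and response bodies are not compressed by HTTP/2 itself; as in HTTP/1.1, that is still done with content encodings such as gzip.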
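Here is a minimal sketch of two HPACK building blocks, following RFC 7541: the prefixed integer encoding and the "indexed header field" representation, where a header already in the table is sent as a single index. Only a handful of static-table entries are included, and the dynamic table and Huffman coding are left out entirely; the function names are hypothetical.

```python
from typing import List, Tuple

# A few entries from the HPACK static table (RFC 7541, Appendix A).
STATIC_TABLE = {
    (":method", "GET"): 2,
    (":method", "POST"): 3,
    (":path", "/"): 4,
    (":scheme", "http"): 6,
    (":scheme", "https"): 7,
    (":status", "200"): 8,
}


def encode_integer(value: int, prefix_bits: int) -> List[int]:
    """Encode an integer with an N-bit prefix (RFC 7541, section 5.1).
    Unused high bits of the first octet are left as 0 for the caller to set."""
    max_prefix = (1 << prefix_bits) - 1
    if value < max_prefix:
        return [value]
    octets = [max_prefix]
    value -= max_prefix
    while value >= 128:
        octets.append(value % 128 + 128)  # low 7 bits, continuation bit set
        value //= 128
    octets.append(value)
    return octets


def encode_indexed_field(name: str, value: str) -> bytes:
    """Indexed Header Field representation: a leading 1 bit, then a 7-bit-prefix index."""
    octets = encode_integer(STATIC_TABLE[(name, value)], 7)
    octets[0] |= 0x80
    return bytes(octets)


def encode_headers(headers: List[Tuple[str, str]]) -> bytes:
    """Encode headers that are all present in the (partial) static table above."""
    return b"".join(encode_indexed_field(n, v) for n, v in headers)


if __name__ == "__main__":
    block = encode_headers([(":method", "GET"), (":scheme", "https"), (":path", "/")])
    print(block.hex())
```

With this, a GET request for `/` over HTTPS sends its three pseudo-headers as just three bytes, `0x82 0x87 0x84`.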

Moving on to the next amazing feature: server push. This is where your client makes one request but gets multiple responses. (This can be a disadvantage as well; I discuss it in the cons section below.)

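It is secure in practice. Although the specification also defines HTTP/2 over cleartext TCP (known as h2c), all major browsers only support HTTP/2 over TLS, so on the web HTTP/2 effectively always runs over HTTPS, typically on port 443 rather than port 80. This is a deliberate design decision, and it has a clear benefit: HTTP/2 traffic on the web is encrypted by default.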

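Protocol negotiation during TLS. How does your client know that the server supports HTTP/2? It finds out during the TLS handshake itself, through the ALPN (Application-Layer Protocol Negotiation) extension: the client lists the protocols it supports (for example `h2` and `http/1.1`), and the server picks one from that list. Because this happens while the encryption is being set up, no extra round trip is needed.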
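On the client side, Python's standard `ssl` module exposes ALPN directly through `SSLContext.set_alpn_protocols()` and `SSLSocket.selected_alpn_protocol()`. The sketch below configures a client context that offers `h2` first and falls back to `http/1.1`, plus a hypothetical `choose_protocol` helper that models the server's side of the choice (here, the server's preference order wins among the protocols the client offered). It does not open a real connection.

```python
import ssl
from typing import Optional, Sequence


def make_client_context() -> ssl.SSLContext:
    """TLS client context that advertises HTTP/2 first, then HTTP/1.1, via ALPN."""
    ctx = ssl.create_default_context()
    ctx.set_alpn_protocols(["h2", "http/1.1"])  # sent in the ClientHello
    return ctx


def choose_protocol(client_offers: Sequence[str],
                    server_supports: Sequence[str]) -> Optional[str]:
    """Toy model of the server's ALPN choice: its most preferred protocol
    that the client also offered, or None if there is no overlap."""
    for proto in server_supports:
        if proto in client_offers:
            return proto
    return None


if __name__ == "__main__":
    print(choose_protocol(["h2", "http/1.1"], ["h2", "http/1.1"]))
    print(choose_protocol(["http/1.1"], ["h2", "http/1.1"]))
```

After `tls = make_client_context().wrap_socket(sock, server_hostname=host)` completes the handshake, `tls.selected_alpn_protocol()` returns `'h2'` if the server agreed to HTTP/2, or `None` if no protocol was negotiated.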

Cons of HTTP/2

When configured incorrectly, server push can be abused. The server ends up pushing multiple resources to the client regardless of whether the client needs them, for example files it already has in its cache. This consumes extra, unnecessary bandwidth, and if it happens again and again, it can saturate the network.

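Another drawback is that it can be slower in mixed mode. (Hey! What's mixed mode? It's when the backend speaks HTTP/1.1 but the load balancer speaks HTTP/2, or vice versa. The load balancer then has to translate between the two protocols, and the HTTP/1.1 side cannot benefit from multiplexing.)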

HTTP/2 – The trump card of gRPC

gRPC uses HTTP/2 as its transport protocol and inherits some great features from it, such as:

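- Binary framing – messages are split into compact binary frames, which are cheaper to parse than HTTP/1.1's text format.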

- Header compression – HTTP/2 compresses headers using HPACK, which reduces overhead and improves performance.

- Multiplexing – as discussed already, the client and server can exchange multiple requests and responses in parallel over a single TCP connection.

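- Server push – strictly speaking, gRPC does not use HTTP/2 server push. What it relies on instead is streaming: a server-streaming RPC sends many response messages back on the same HTTP/2 stream.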
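For reference, gRPC puts its own simple framing inside the HTTP/2 DATA frames of a call's stream: each message is a 1-byte compressed flag, a 4-byte big-endian length, and then the serialized message (normally Protocol Buffers). A streaming RPC is several of these back to back on one stream. The sketch below uses placeholder bytes instead of real protobuf messages, and the helper names are hypothetical.

```python
from typing import List, Tuple


def frame_grpc_message(message: bytes, compressed: bool = False) -> bytes:
    """Length-prefixed message: 1-byte compressed flag, 4-byte big-endian length, payload."""
    return bytes([1 if compressed else 0]) + len(message).to_bytes(4, "big") + message


def parse_grpc_messages(body: bytes) -> List[Tuple[bool, bytes]]:
    """Split the bytes received on one stream into (compressed, message) pairs.
    A trailing incomplete message is left unparsed."""
    messages: List[Tuple[bool, bytes]] = []
    offset = 0
    while offset + 5 <= len(body):
        compressed = body[offset] == 1
        length = int.from_bytes(body[offset + 1:offset + 5], "big")
        start, end = offset + 5, offset + 5 + length
        if end > len(body):
            break  # wait for more bytes
        messages.append((compressed, body[start:end]))
        offset = end
    return messages


if __name__ == "__main__":
    # A server-streaming reply: two messages back to back on the same stream.
    stream_body = frame_grpc_message(b"update-1") + frame_grpc_message(b"update-2")
    print(parse_grpc_messages(stream_body))
```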